References

Aaronson, S A, “What are we talking about when we talk about digital protectionism?”, World Trade Review, vol 18, no 4, October 2019.

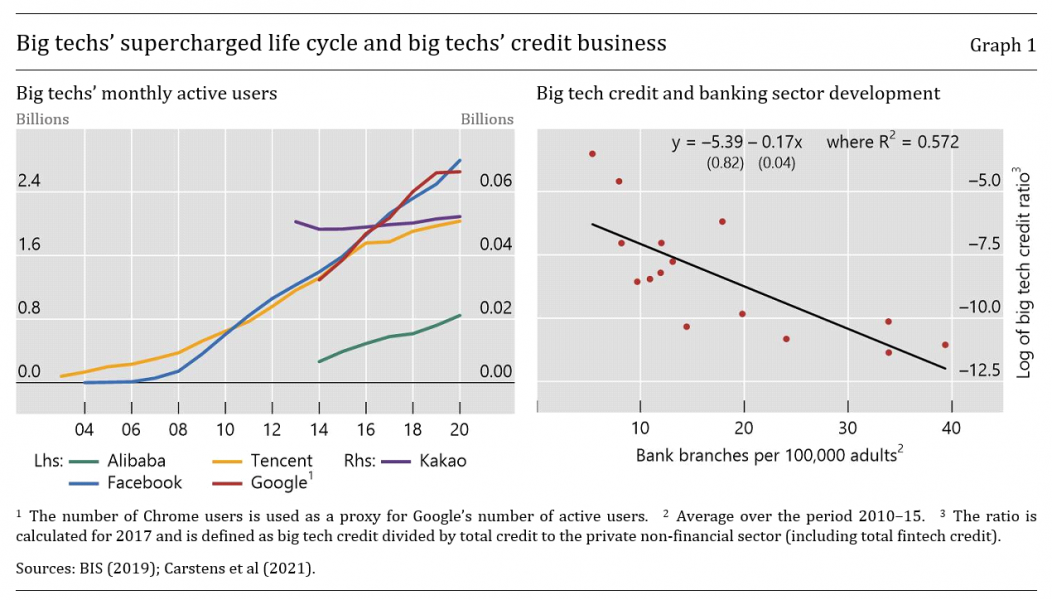

Bank for International Settlements (BIS), “Big tech in finance: opportunities and risks”, Annual Economic Report 2019, Chapter III, June 2019.

Boissay, F, T Ehlers, L Gambacorta and H S Shin, “Big techs in finance: on the new nexus between data privacy and competition”, BIS Working Papers, no 970, October 2021.

Carrière-Swallow, Y and V Haksar, “The economics and implications of data: an integrated perspective”, IMF Departmental Papers, vol 19, no 16, 2019.

Carstens, A, S Claessens, F Restoy and H S Shin, “Regulating big techs in finance”, BIS Bulletin, no 45, 2021.

Chen, S, S Doerr, J Frost, L Gambacorta and H S Shin, “The fintech gender gap”, BIS Working Papers, no 931, March 2021.

Cornelli, G, J Frost, L Gambacorta, R Rau, R Wardrop and T Ziegler, “Fintech and big tech credit: a new database”, BIS Working Papers, no 887, September 2020.

Crisanto, J C, J Ehrentraud, A Lawson and F Restoy, “Big tech regulation: what is going on?”, FSI Insights on policy implementation, no 36, September 2021.

Croxson, K, J Frost, L Gambacorta and T Valletti, “Platform-based business models and financial inclusion”, BIS Working Papers, forthcoming, 2021.

Cyberspace Administration of China, “Security Assessment of Cross-border Transfer of Personal Information”, June 2019.

Farboodi, M, R Mihet, T Philippon and L Veldkamp, “Big data and firm dynamics”, NBER Working Papers, no 25515, January 2019.

Financial Times, “How top health websites are sharing sensitive data with advertisers”, 13 November 2019, www.ft.com/content/0fbf4d8e-022b-11ea-be59-e49b2a136b8d.

Frost, J, L Gambacorta, Y Huang, H S Shin and P Zbinden, “BigTech and the changing structure of financial intermediation”, BIS Working Papers, no 779, April 2019.

Fuster, A, P Goldsmith-Pinkham, T Ramadorai and A Walther, “The effect of machine learning on credit markets”, VoxEU, 11 January 2019.

Hau, H, Y Huang, H Shan and Z Sheng, “Fintech credit, financial inclusion and entrepreneurial growth”, mimeo, 2018.

Huang, Y, C Lin, Z Sheng and L Wei, “Fintech credit and service quality”, mimeo, 2018.

Mitchell, D and N Mishra, “Regulating cross-border data flows in a data-driven world: how WTO law can contribute”, Journal of International Economic Law, vol 22, no 3, September 2019.

Nguyen Trieu, H, “Why finance is becoming hyperscalable”, Disruptive Finance, 24 July 2017.

O’Neil, C, Weapons of math destruction: how big data increases inequality and threatens democracy, Broadway Books, 2016.

Petralia, K, T Philippon, T Rice and N Veron, “Banking Disrupted? Financial Intermediation in an Era of Transformational Technology”, Geneva Reports, no 22, 2019.