This Policy Brief is based on IFC Report no 18 (IFC (2025a)). The views expressed are those of the authors and do not necessarily reflect the views of the BIS, the Central Bank of Chile (CBC), the IFC or its members. The authors are grateful for comments and suggestions from Juan Pablo Cova, Robert Kirchner, Michael Machuene Manamela, Alberto Naudon and participants at the 4th IFC-Bank of Italy Workshop on “Data Science in Central Banking” (February 2025).

Abstract

This policy brief summarises the key findings of a recent survey by the Irving Fisher Committee on Central Bank Statistics (IFC) of the Bank for International Settlements (BIS) on the implementation and governance of artificial intelligence (AI). This technology has become strategically important for central banks, and the main question is how to use it effectively and responsibly to support their various tasks and better guide decision-making. Central banks’ experience shows that AI deployment is being accompanied by adequate and robust governance, especially to ensure the development of effective, user-focused applications and to mitigate the associated risks. But AI implementation also raises several IT challenges, including the pressing yet costly need for more computational power. Looking ahead, the key priorities for making the most of AI opportunities appear to be further progress on data management, governance and infrastructure, as well as ensuring that society can continue to access high-quality reference information.

Artificial intelligence (AI) holds significant potential for central banks to support their various tasks and better guide decision-making. This technology leverages the increasing availability of computational resources to perform tasks that have traditionally required human intervention (FSB (2017)). Indeed, central banks have been using AI in their workflows for many years, especially machine learning (ML) – a subset of AI based on complex algorithms trained on vast amounts of data – with benefits for economic analysis, research and statistics (IFC (2015, 2022)).

The recent advent of generative AI – a class of AI that learns the patterns and structure of input content such as text, images, audio and video (“training data”) and generates new but similar content without requiring much user expertise – has accelerated the adoption of this technology even further. Large language models (LLMs) in particular are a prominent category of generative AI able to produce human-like text. Central banks have increasingly begun experimenting with these new tools, ranging from chatbots to assistants for coding and data analytics.

Despite its potential benefits, the embrace of AI raises three main questions. The first relates to its responsible and safe use, as the technology is not free of limitations and risks. Dealing with these challenges puts a premium on setting up adequate governance and risk management frameworks. Second, the development and deployment of AI may require the adaptation of existing IT systems. Key issues include access to sufficient computational resources, the availability and reliability of data platforms, and the adoption of cloud services, which may involve trade-offs in terms of performance, security and sovereignty. Third, making the most of AI calls for ensuring the dissemination of high-quality, machine-readable, transparent and well-documented data.

This policy brief outlines central banks’ experiences in implementing and governing AI, based on an IFC survey covering 60 jurisdictions conducted at the end of 2024. It reviews the current state of AI adoption, with a focus on governance and the impact on IT systems. It concludes with lessons from central banks’ experience on how to harness the benefits of AI in the evolving data and technological landscape.

Central banks are showing growing interest in AI, with many planning to increase the budget for related projects in the coming years. There are several reasons why central banks place this technology high on their agenda. One is its versatility in supporting a broad range of central banking activities, from textual and statistical data processing and analysis to macroeconomic and financial monitoring. In addition, AI can significantly augment software programming capabilities and help automate existing workflows, paving the way for potential efficiency and productivity gains.

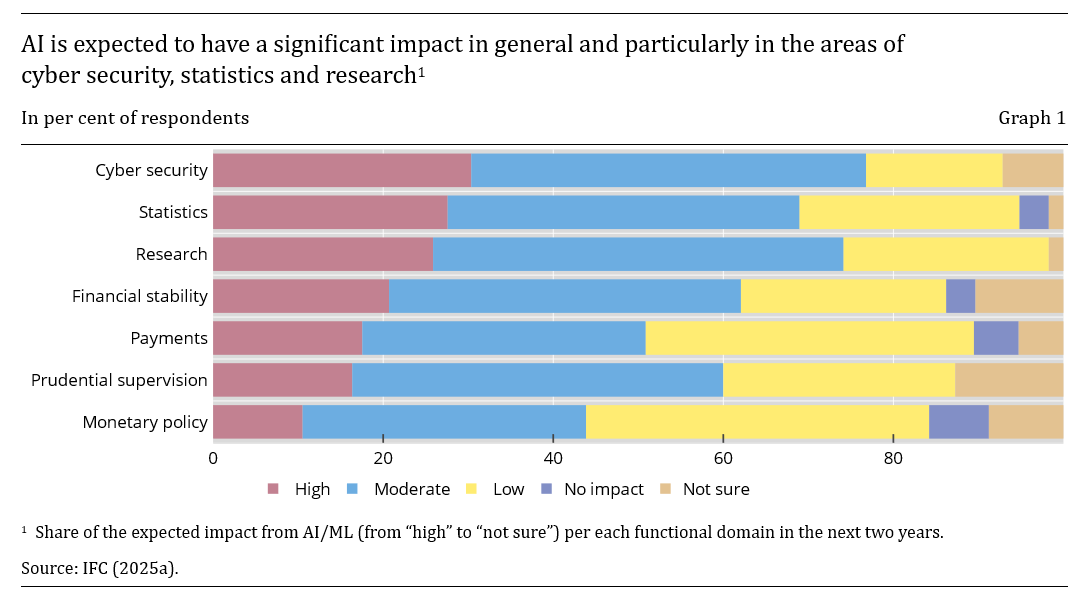

Indeed, central banks expect significant benefits from AI in support of their various tasks. Opportunities in the areas of cyber security, research and statistics are judged to be the most valuable (Graph 1). Specifically, AI can assist economic research, for instance with mathematical analysis, summarising literature, writing and explaining software code, and helping to edit drafts (Kwon et al (2024)). Central banks also expect AI to support their statistical function, for instance by managing quality processes, generating synthetic data and improving documentation. More broadly, this technology has the potential to support core policy areas, including monetary and financial stability. Use cases range from inflation forecasting to macroeconomic modelling, detection of macro-financial distress and development of stress tests (Araujo et al (2024)).

Yet the number of AI applications in production remains relatively low to date. Most reported use cases are experimental, with few fully deployed in day-to-day operations. Key reasons include still-limited IT infrastructure and in-house expertise. Another explanation is that most exploratory work is conducted within business areas, whereas production-grade applications often require institution-wide coordination involving various stakeholders (eg functional, IT and security departments). However, this picture may change rapidly as central banks’ IT infrastructure and governance arrangements mature in response to the growing interest in AI.

With a growing number of AI applications under consideration, central banks are increasingly implementing adequate and robust AI governance to enable the effective use of this technology in line with organisational goals and risk management standards.

The primary focus in this context has been on four areas: data management, organisational structures, policies and risk frameworks. There is increased recognition that comprehensive governance frameworks can help address complex AI-related business needs more consistently and effectively, by developing synergies and avoiding overlaps or inconsistencies. First, central banks can draw on their extensive experience in data governance, incorporating solutions such as comprehensive data management systems, metadata registries, common vocabularies, statistical standards and efficient data exploration (Križman and Tissot (2022)). Second, central banks are setting up new organisational structures, or adapting existing ones, to support AI implementation. Solutions range from centralised steering committees to hub-and-spoke models and fully decentralised approaches led by business lines. Third, adequate guidelines and policies are being developed, for instance to define the responsible use of AI systems, document methods and processes, and ensure the accuracy and reliability of outputs. Finally, risk management is deemed critical for assessing risks and monitoring AI systems on an ongoing basis, for instance through regular audits, evaluation of safety measures, incident response procedures and business continuity plans.

The overall picture from the survey suggests, however, that AI governance is still at an early stage, particularly for central banks in emerging market economies. Indeed, most AI/ML use cases have been implemented on an ad hoc basis by the various business areas. Such a decentralised approach provides greater agility and speed in implementing tailored solutions, reducing the risk of overly ambitious plans that fail to deliver concrete business value. Yet the heterogeneous proliferation of use cases across the organisation may lead to a sub-optimal deployment of AI, by potentially limiting cross-area experience sharing and reinforcing functional “silos”. Further, it may make it more difficult to mitigate the various risks associated with this technology, such as those related to privacy protection, cyber security weaknesses, skills shortages and ethical biases – all of which are key concerns for central banks that can arguably be best addressed comprehensively at the institution-wide level.

While central banks already have substantial experience in developing comprehensive IT and data platforms (IFC (2020)), the integration of AI systems into their existing infrastructures appears to have generated multiple challenges.

First, the development and deployment of AI-based tools are requiring substantial investments in high-performance computing infrastructure. Related issues include soaring energy consumption and large carbon footprints. Second, central banks need scalable data platforms. AI applications demand powerful IT infrastructures to process vast amounts of data while ensuring reliability, availability and performance. They also require platforms capable of handling unstructured data types, such as text, images, audio and video. As a result, a growing number of central banks are investing in modern data storage solutions.

These considerations suggest that outsourcing computational infrastructure to the cloud could help central banks further advance AI adoption, but this option has limitations. Cloud services can indeed provide customised hardware on demand, arguably with lower upfront investment and maintenance costs. However, if not adequately managed, relying on cloud services poses a number of risks, such as those related to data privacy and security. Data sovereignty is another concern, especially when hosting facilities are located outside the central bank’s reach, potentially raising legal and geopolitical risks. Reflecting these aspects, central banks in practice appear to prefer on-premises cloud solutions, which allow them to manage their data infrastructure in their own private data centres.

Another trade-off is whether to develop customised software solutions or use off-the-shelf products. The former, typically developed in-house, are arguably better tailored to user requirements but may require significant investments. By contrast, off-the-shelf applications supplied by third-party vendors can be quicker to deploy and easier to maintain. Yet such proprietary solutions may increase central banks’ dependency on third parties and offer less scalability, adaptability and flexibility to address specific business requirements.

A possible way forward, increasingly explored by central banks, is to adopt open source approaches for AI and software. Open source AI models can arguably be used, inspected or modified with more flexibility than closed models, and can also offer greater portability. Nonetheless, choosing the open source approach requires more expertise and resources, for instance for maintenance or integration with legacy systems. Reflecting these trade-offs, central banks have in practice adopted a hybrid approach, implementing both open and closed source AI models.

Making the most of the opportunities offered by AI calls for a clear roadmap focusing on four key areas.

First, securing the quality of reference information has emerged as a key priority, not least because AI-generated outputs are shaped by the quality of their input data. In practice, this entails strengthening data documentation and transparency, for example through version control, persistent identifiers, and open, findable and reusable data (US DoC (2025)). Another solution is to make information machine-readable and actionable, for instance by using interoperable standards and disseminating AI-relevant information that helps users better understand the sources underlying the models they use. Moreover, as producers of official statistics, central banks can leverage their experience to act as data curators in the broader data ecosystem, alongside statistical offices and international organisations. Relevant initiatives may include setting guidelines, identifying, monitoring and closing data gaps, and contributing to global data frameworks (UN HLAB-AI (2024)).

Second, facilitating access to and adequate sharing of high-quality and trustworthy information can help address the risk of data bottlenecks, given that AI’s need for accurate information is constantly expanding. Central banks have extensive experience in making statistical information available to large audiences, within their own organisation, across the statistical system and in the broader data ecosystem. They are also contributing to key international initiatives to facilitate cross-border data exchange, such as in the context of the G20 Data Gaps Initiative, to foster adequate data sharing and access, especially to private and administrative sources (IMF et al (2023)). Another development could be the creation of national and global data libraries to make public data sets more accessible to all users, both humans and machines. Taken together, these efforts call for strengthening partnerships between central banks and statistical offices, but also with other stakeholders, including private actors, which hold a growing share of alternative – especially unstructured – data.

Third, making the most of AI opportunities underscores the importance of modernising data processes and infrastructures, not least to facilitate the use of novel data types and the linking of multiple information sources. Concretely, this may imply further efforts to adapt existing IT systems to store, integrate and protect vast amounts of diverse information, for instance in dedicated data lakes and hubs. It also calls for enhanced interoperability of data processes and systems, by adopting statistical standards such as the Statistical Data and Metadata eXchange (SDMX) standard (IFC (2025b)), promoting unique identifiers and linking data and metadata registers at the national and global levels.

Fourth, supporting the development of AI calls for building adequate expertise, both within the organisation and in the wider population. Internally, central banks can draw on their experience in analysing, managing and producing data. They can also leverage their multidisciplinary teams, which are typically proficient in IT, statistical and data techniques. Other solutions include offering specialised career tracks and promoting internal knowledge by setting up AI-specific training curricula for staff. Across the broader ecosystem, the increased ability of wider audiences to access new types of data and use innovative techniques underscores the need to educate users and ensure adequate AI awareness and literacy. Options include developing programmes to support upskilling as well as offering methodological guidance and tailored communication to users.

References

Araujo, D, S Doerr, L Gambacorta and B Tissot (2024): “Artificial intelligence in central banking”, BIS Bulletin, no 84, January.

Financial Stability Board (FSB) (2017): Artificial intelligence and machine learning in financial services – market developments and financial stability implications, November.

International Monetary Fund (IMF), Inter-Agency Group on Economic and Financial Statistics and FSB Secretariat (2023): G20 Data Gaps Initiative 3 workplan – People, planet, economy, March.

Irving Fisher Committee on Central Bank Statistics (IFC) (2015): “Central banks’ use of and interest in ‘big data’”, IFC Report, no 3, October.

——— (2020): “Computing platforms for big data analytics and artificial intelligence”, IFC Report, no 11, April.

——— (2022): “Machine learning in central banking”, IFC Bulletin, no 57, November.

——— (2025a): “Governance and implementation of AI in central banks”, IFC Report, no 18, April.

——— (2025b): “SDMX adoption and use of open source tools”, IFC Report, no 17, February.

Križman, I and B Tissot (2022): “Data governance frameworks for official statistics and the integration of alternative sources”, Statistical Journal of the IAOS, vol 38, no 3, August, pp 947–55.

Kwon, B, T Park, F Perez-Cruz and P Rungcharoenkitkul (2024): “Large language models: a primer for economists”, BIS Quarterly Review, December.

United Nations High-Level Advisory Body on Artificial Intelligence (UN HLAB-AI) (2024): Governing AI for humanity – final report, September.

United States Department of Commerce (US DoC) (2025): Generative artificial intelligence and open data: guidelines and best practices, January.